Let me start with a scene I’ve lived through too many times.

A data scientist walks into the office with that excited “I trained a new model last night” energy. They open a notebook, run a few cells, show a screenshot of 92% accuracy, and everyone claps like this will somehow replace five backend services by Friday.

Fast forward two weeks.

The notebook only runs on their machine.

The dataset used for training can’t be found.

The bucket name hardcoded in the script doesn’t exist anymore.

The model performs differently in staging than it did on their laptop.

And nobody knows why.

Welcome to ML engineering in most companies.

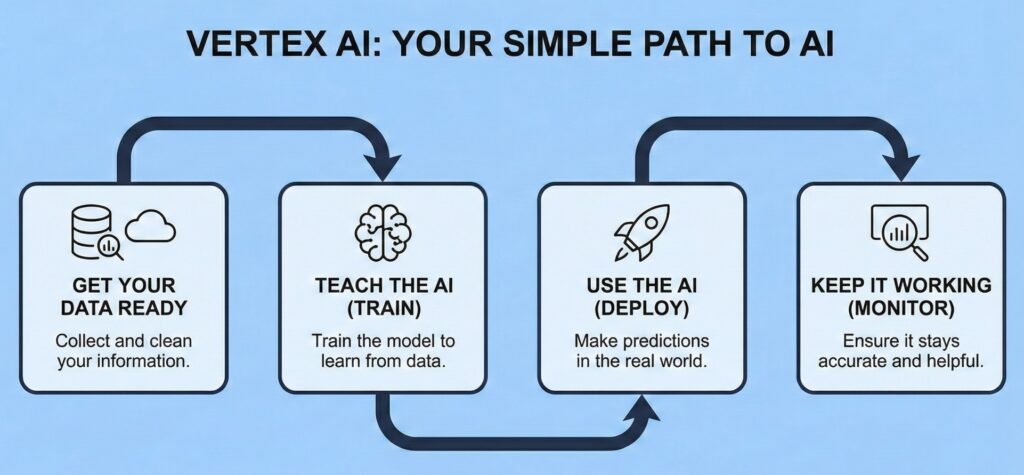

This is the exact mess Vertex AI tries to eliminate—not by being magical, but by enforcing discipline without forcing you to build an entire platform from scratch.

It’s the thing you deploy when you’re tired of being the janitor for everyone’s ML experiments.

What Vertex AI Looks Like From the Trenches

Imagine you’re running an ML project without Vertex:

- One engineer is hoarding GPU VMs like real estate.

- Someone is manually exporting CSVs into buckets.

- Pipelines exist only in Slack messages.

- Model “versions” are ZIP files in someone’s downloads folder.

- Compliance starts sending emails like “Who authorized this PII export?”

Now swap all that chaos for a single, coherent environment where:

- Data is versioned.

- Models have a registry.

- Training jobs are reproducible.

- Serving endpoints scale without crying.

- Pipelines don’t break when someone goes on vacation.

- IAM actually means something.

That’s Vertex AI.

Not flashy. But reliable in the way good infrastructure should be.

Why Vertex AI Exists (The Real Problems It Solves)

1. The Workflow Spaghetti Problem

Before Vertex, ML workflows usually evolve like this:

- Start as a single notebook.

- Grow into multiple notebooks.

- Then Bash scripts.

- Then a random VM.

- Then someone forks the scripts.

- Then nobody knows which version is the “real one.”

Vertex centralizes:

- Training

- Datasets

- Tuning

- Pipelines

- Deployment

- Registry

Meaning you stop relying on tribal knowledge and Slack archaeology.

2. Infrastructure Whack-A-Mole

Here’s a classic ML engineer day:

- Spin up a GPU VM

- Install CUDA

- Install drivers

- Break TensorFlow

- Reinstall CUDA

- Cry

Managed training in Vertex kills that nonsense. You specify:

- Machine type

- Accelerator

- Container image

And it just runs. Same environment. Every time.
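As a rough illustration, here are those three choices expressed as a worker pool specification. The dictionary shape (`machine_spec`, `replica_count`, `container_spec`) follows the one the Vertex AI Python SDK accepts for `aiplatform.CustomJob(worker_pool_specs=...)`. The helper function and the project, repository, and image names are hypothetical. The sketch only builds and validates the spec, so it runs without credentials.

```python
from typing import Any, Dict, List, Optional


def build_worker_pool_spec(
    machine_type: str,
    image_uri: str,
    accelerator_type: Optional[str] = None,
    accelerator_count: int = 0,
    args: Optional[List[str]] = None,
) -> List[Dict[str, Any]]:
    """Build a single-pool spec shaped like the SDK's `worker_pool_specs` argument."""
    machine_spec: Dict[str, Any] = {"machine_type": machine_type}
    if accelerator_type:
        if accelerator_count < 1:
            raise ValueError("accelerator_count must be >= 1 when an accelerator is set")
        machine_spec["accelerator_type"] = accelerator_type
        machine_spec["accelerator_count"] = accelerator_count
    return [
        {
            "machine_spec": machine_spec,
            "replica_count": 1,  # a single worker; distributed jobs use more pools/replicas
            "container_spec": {"image_uri": image_uri, "args": args or []},
        }
    ]


# Hypothetical project, repository, and image names.
spec = build_worker_pool_spec(
    machine_type="n1-standard-8",
    image_uri="us-central1-docker.pkg.dev/my-project/ml/trainer:1.4.2",
    accelerator_type="NVIDIA_TESLA_T4",
    accelerator_count=1,
    args=["--epochs=10"],
)
print(spec)
```

With the `google-cloud-aiplatform` package installed and credentials configured, this list is roughly what you would pass as `worker_pool_specs` to `aiplatform.CustomJob(...)`, along with a display name and a staging bucket, before calling `.run()`. That step is left out so the sketch runs offline.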

3. Model Serving Without Homemade Surgery

Teams love to ignore serving until the last minute. Then suddenly:

- Traffic spikes

- Latency tanks

- Costs rise

- Rollbacks fail

Vertex AI Endpoints handle:

- Autoscaling

- Canary releases

- Versioning

- Logging and metrics

No fragile Flask apps duct-taped behind a load balancer.
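Canary releases on an endpoint come down to a traffic split: a map from deployed-model ID to the percentage of requests that deployment receives, with the values adding up to 100. The helper below is a hypothetical sketch, not an SDK function. It hands a chosen percentage to a new deployment and shrinks the existing deployments proportionally. The IDs are made up.

```python
from typing import Dict


def canary_split(current: Dict[str, int], new_id: str, canary_pct: int) -> Dict[str, int]:
    """Give `canary_pct` percent of traffic to `new_id`, shrinking the others proportionally.

    All values are integers that always sum to 100, matching the endpoint traffic-split rule.
    """
    if not 0 < canary_pct < 100:
        raise ValueError("canary_pct must be between 1 and 99")
    if sum(current.values()) != 100:
        raise ValueError("current split must sum to 100")
    if new_id in current:
        raise ValueError("new_id is already deployed")
    remaining = 100 - canary_pct
    split = {mid: pct * remaining // 100 for mid, pct in current.items()}
    # Integer division can drop a point or two; give them to the largest existing deployment.
    split[max(split, key=split.get)] += remaining - sum(split.values())
    split[new_id] = canary_pct
    return split


print(canary_split({"1111": 100}, "2222", 10))  # {'1111': 90, '2222': 10}
```

In the Vertex AI Python SDK, `Endpoint.deploy` accepts a `traffic_split` dictionary of this shape, where the key `"0"` stands for the model being deployed. Rolling back is then just another split that sends 100 back to the old ID.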

4. Security That Survives an Audit

ML systems are notorious for being security disasters:

- Over-permissive service accounts

- Public notebooks

- Buckets with wildcard access

- Datasets copied to unknown regions

Vertex wraps ML inside proper enterprise controls:

- VPC Service Controls (VPC-SC)

- Customer-managed encryption keys (CMEK)

- Dataset-level IAM

- Private Service Connect

- Lineage and audit trails

So you don’t fail audits you didn’t know were happening.

Managed Datasets: Where the Real Discipline Begins

Let’s be real: most ML data handling is a crime scene.

You’ll find:

- CSVs named “data_final_v4_REAL.csv”

- Buckets with no folder structure

- Untracked schema changes

- PII inside training files

- No version history whatsoever

Vertex AI Managed Datasets drag teams out of that mess.

What “Managed” Actually Means

A Managed Dataset gives you:

- Dataset versioning

- Lineage tracking

- Schema enforcement

- Access control

- Integrated labeling tools

- Compatibility with BigQuery

Suddenly:

- You know which dataset trained which model.

- You know who changed what and when.

- You have a versioned baseline to check for data drift against.

- You can trace an accidental sensitive upload back to who made it and when.
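The core idea behind versioning and lineage fits in a few lines. This is a hypothetical, stdlib-only sketch of the concept, not how Vertex AI implements Managed Datasets: a dataset version is derived from a hash of its contents, and each trained model records which version, code commit, and person produced it. The model name, commit hash, and email address are made up.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


def dataset_version(files: Dict[str, bytes]) -> str:
    """Content-addressed version ID: identical files give the same ID, any change gives a new one."""
    h = hashlib.sha256()
    for name in sorted(files):  # sort so file order does not affect the ID
        h.update(name.encode() + b"\0")
        h.update(hashlib.sha256(files[name]).digest())
    return h.hexdigest()[:12]


@dataclass
class LineageRecord:
    model_id: str
    dataset_version: str
    trained_by: str
    code_commit: str


lineage: List[LineageRecord] = []

v1 = dataset_version({"train.csv": b"id,label\n1,0\n2,1\n"})
lineage.append(LineageRecord("churn-model-7", v1, "alice@example.com", "a1b2c3d"))

v2 = dataset_version({"train.csv": b"id,label\n1,0\n2,1\n3,1\n"})
print(v1 != v2)  # True: one extra row is a new version
print(json.dumps(asdict(lineage[0])))
```

Because the ID comes from the bytes rather than the file name, "data_final_v4_REAL.csv" can no longer quietly change underneath a model that claims to have been trained on it.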

Why It Matters in the Real World

Without dataset management, ML becomes:

- Non-repeatable

- Untrustworthy

- Non-compliant

With Managed Datasets, you stop guessing and start knowing.

Pipelines: The Only Way to Stay Sane

You can’t build a real ML system on manual notebook runs. Not unless you enjoy pain.

Vertex AI Pipelines give you:

- Each step running in its own container

- Artifact tracking

- Reproducible executions

- Automated workflows

- Integration with training, datasets, and registry

This turns ML from a hobby project into an engineered system.

A pipeline doesn’t take a sick day.

A pipeline doesn’t forget to run.

A pipeline doesn’t push the wrong model to prod.

People do.

Scripts do.

Pipelines don’t.
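Vertex AI Pipelines runs pipelines defined with the Kubeflow Pipelines (KFP) or TFX SDKs. It records each step’s output artifacts and can skip a step whose inputs haven’t changed (execution caching). The toy runner below is a hypothetical, stdlib-only sketch of those two ideas, not KFP code: every step’s output is kept as a named artifact, and a step only re-executes when its name and inputs hash to something new.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List, Tuple

# (step name, function taking the upstream artifacts, names of upstream steps)
Step = Tuple[str, Callable[[Dict[str, Any]], Any], List[str]]


def run_pipeline(steps: List[Step], cache: Dict[str, Any], log: List[str]) -> Dict[str, Any]:
    """Run steps in order, recording every artifact and reusing cached results for identical inputs."""
    artifacts: Dict[str, Any] = {}
    for name, fn, inputs in steps:
        step_inputs = {k: artifacts[k] for k in inputs}  # KeyError if an upstream step is missing
        # Cache key = step name + its inputs (artifacts must be JSON-serializable in this toy).
        key = hashlib.sha256(json.dumps([name, step_inputs], sort_keys=True).encode()).hexdigest()
        if key in cache:
            log.append(f"{name}: cache hit")
        else:
            cache[key] = fn(step_inputs)
            log.append(f"{name}: executed")
        artifacts[name] = cache[key]
    return artifacts


steps: List[Step] = [
    ("load", lambda _: [3.0, 1.0, 2.0], []),
    ("normalize", lambda a: [x / max(a["load"]) for x in a["load"]], ["load"]),
    ("train", lambda a: {"weight": sum(a["normalize"]) / len(a["normalize"])}, ["normalize"]),
]

cache: Dict[str, Any] = {}
log: List[str] = []
first = run_pipeline(steps, cache, log)
second = run_pipeline(steps, cache, log)  # same inputs, so every step is a cache hit
print(log)
```

The second run does no work and produces identical artifacts, which is the property that lets a pipeline, unlike a person, run the same way on a Tuesday night as it did on Monday morning.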

Training Jobs: No More VM Snowflakes

Training jobs in Vertex AI are:

- Isolated

- Reproducible

- Logged

- Provisioned on demand and released when the job ends

- Retry-safe

- GPU- and TPU-capable

You can even bring your own container if you don’t trust the prebuilt ones.

The key outcome?

Your training environment finally stops changing every time someone updates pip.
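One concrete habit behind a stable training environment is pinning the container image by digest instead of a mutable tag such as `:latest`. A tag can be re-pointed at a different build; an `@sha256:` digest cannot. The check below is a hypothetical pre-submit guard. The image URIs are made up and follow the Artifact Registry `REGION-docker.pkg.dev/PROJECT/REPOSITORY/IMAGE` pattern.

```python
import re

# Matches "@sha256:" followed by exactly 64 lowercase hex characters at the end of the reference.
_DIGEST = re.compile(r"@sha256:[0-9a-f]{64}$")


def is_pinned(image_uri: str) -> bool:
    """True only if the image is referenced by an immutable content digest."""
    return bool(_DIGEST.search(image_uri))


def require_pinned(image_uri: str) -> str:
    """Reject a job submission whose training image could change between runs."""
    if not is_pinned(image_uri):
        raise ValueError(f"training image must be pinned by digest, got: {image_uri}")
    return image_uri


base = "us-central1-docker.pkg.dev/my-project/ml/trainer"
print(is_pinned(f"{base}:latest"))  # False: tags can move
print(is_pinned(f"{base}@sha256:" + "ab" * 32))  # True
```

Pinning the image only helps if the image itself was built from pinned dependencies; an unpinned `pip install` at build time still drifts from one build to the next.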

The Ugly Truth About Data Exfiltration

Every ML platform has escape routes.

Most teams discover them after the damage is done.

Typical exfiltration paths:

- Public notebooks accessing private datasets

- Over-privileged service accounts

- Data being exported to public buckets

- Training code writing artifacts outside the VPC Service Controls perimeter

- Developers downloading datasets “just for testing”

- Models embedding slices of sensitive data

Vertex gives you tools to shut these doors — but only if you use them.

Controls That Actually Stop Leaks

- VPC Service Controls (your real perimeter)

- CMEK everywhere

- Notebook internet egress disabled

- Tightly scoped workload identities (one service account per workload, minimal roles)

- Dataset-level permissions

- Private Service Connect for endpoints

- Artifact Registry restricted to internal networks

- DLP scanning pipelines

With these, data doesn’t leak accidentally.

Without them, it absolutely will.
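The DLP control in the list above is normally an inspection job run by Google Cloud’s DLP service (now part of Sensitive Data Protection). As a deliberately simplified stand-in, not the DLP API, here is a pipeline gate that refuses to pass training rows containing obvious email addresses or US-SSN-shaped strings. The patterns and function names are hypothetical, and they miss plenty that a real inspector would catch.

```python
import re
from typing import Dict, Iterable, List, Tuple

# Deliberately crude detectors; a real deployment would use a managed inspection service.
PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(rows: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (row_index, detector_name) for every detector that matches a row."""
    hits = []
    for i, row in enumerate(rows):
        for name, pattern in PATTERNS.items():
            if pattern.search(row):
                hits.append((i, name))
    return hits


def pii_gate(rows: List[str]) -> List[str]:
    """Fail the pipeline step instead of silently passing sensitive rows downstream."""
    hits = find_pii(rows)
    if hits:
        raise RuntimeError(f"PII detected, blocking export: {hits}")
    return rows


rows = ["id=1,score=0.7", "id=2,contact=jane@example.com", "id=3,ssn=123-45-6789"]
print(find_pii(rows))  # [(1, 'EMAIL'), (2, 'US_SSN')]
```

Failing loudly is the design choice that matters here: a gate that only logs a warning is just another leak with a paper trail.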

Common Gotchas (You’ll Thank Me Later)

Believing Vertex Replaces ML Expertise

It won’t do your job. It just removes repetitive pain.

Leaving Everything in Notebooks

Notebooks are experiments.

Pipelines are production.

Over-Permissive IAM

If someone can read the dataset, they can steal it.

Use least privilege.
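Least privilege is easier to keep when it’s checked automatically. An IAM policy is a JSON document whose `bindings` pair a `role` with a list of `members`. The hypothetical audit below flags two patterns this article warns about: service accounts holding basic roles (`roles/owner`, `roles/editor`), and anything granted to the public principals `allUsers` or `allAuthenticatedUsers`. The sample policy and account names are made up. In practice you might feed it the output of `gcloud projects get-iam-policy PROJECT_ID --format=json`.

```python
from typing import Any, Dict, List

BROAD_ROLES = {"roles/owner", "roles/editor"}
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def audit_policy(policy: Dict[str, Any]) -> List[str]:
    """Return human-readable findings for over-broad bindings in an IAM policy."""
    findings = []
    for binding in policy.get("bindings", []):
        role = binding["role"]
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                findings.append(f"{role} granted to public principal {member}")
            elif role in BROAD_ROLES and member.startswith("serviceAccount:"):
                findings.append(f"service account {member} has basic role {role}")
    return findings


policy = {
    "bindings": [
        {"role": "roles/aiplatform.user",
         "members": ["serviceAccount:trainer@my-project.iam.gserviceaccount.com"]},
        {"role": "roles/editor",
         "members": ["serviceAccount:notebook@my-project.iam.gserviceaccount.com"]},
        {"role": "roles/storage.objectViewer", "members": ["allUsers"]},
    ]
}
for finding in audit_policy(policy):
    print(finding)
```

The narrowly scoped `roles/aiplatform.user` binding passes. The notebook service account with `roles/editor` and the public object viewer grant are both flagged.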

Ignoring BigQuery Until It’s Too Late

If you’re in a SQL-heavy org, make BigQuery part of the design — early.

A Final Thought

Vertex AI won’t make your models smarter.

It won’t make your data scientists more disciplined.

It won’t magically fix badly designed ML systems.

But it will give you the kind of infrastructure backbone you need to survive ML at scale:

- predictable training

- enforceable security

- auditable data

- repeatable pipelines

- real deployment processes

It’s the difference between “we have a cool notebook” and “we run ML in production without fear.”